Most of us as consumers and earners would like to keep a track of our personal financial transactions. However, it can be a tedious practice as the volume of transactions is high, diverse and requires parsing of many files. Through this article, we will be introduced to three utilities which can be used to make this job easier for us.

The three powerful text processing command-line utilities, grep, sed, and awk, available in the GNU Bash Shell and commonly available in GNU/Linux operating systems can help us keep track of personal spending and earnings. Windows 10 users can use the Ubuntu on Windows feature to utilise the bash shell. See https://bit.ly/UbuntuOnWin for instructions on how to download this in the Windows 10 environment.

Let us go through the process step-by-step.

All examples in this article make use of the sample data set Expenses.csv containing personal income and expenditure information. (The dataset is available here https://bit.ly/incomedataset). This dataset has the following structure.

Let us start with our first utility, grep, a powerful search utility that can be applied to text documents. The general form of using grep is

$ grep search_pattern file_list

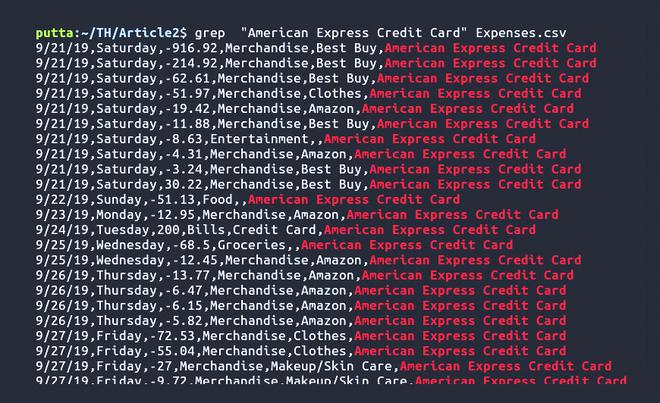

Imagine we want to list all transactions on an American Express credit card. You can achieve that by giving the following command.

$ grep “American Express Credit Card” Expenses.csv

One has to note that the search parameter should be included within double quotes if it consists of any white space. Otherwise, quotes are not necessary.

Sometimes one would be more interested in getting the number of transactions of a particular type; let's say you want to count the number of pharmacy related transactions. The following command can achieve this.

$ grep -c Pharmacy Expenses.csv

Another common thing we encounter is that we do not remember filenames that contain a specific text in a particular directory. Here also grep can come to the rescue; all you have to do is issue a command with the following syntax.

$ grep -lr search_pattern folder

Suppose we want to list all files recursively within the Article2 directory containing the pattern awk; the following command achieves that.

$ grep -lr awk Article2/

As we have seen so far, grep can be used only as a search utility and cannot be used to modify the file contents. Here is where the second utility sed comes in handy. sed is a stream editor used to apply text transformations. When we display the contents of Expenses.csv using the cat command (a command which displays the contents of one or more files without having to open the file for editing), the content may appear closely packed, making it difficult to view the contents. So, let's see how sed handles this in the following command.

$ sed -e 'i\ ' Expenses.csv

It will result in an empty line being added before each line of text, making it easy for the eye to view the contents.

One can obtain double spacing by using the following command.

$ sed -e 'i\\n' Expenses.csv

Now let's see how to highlight lines having a specific word. Suppose we want all Food related transactions to be underlined, issue the following command.

$ sed -e '/Food/a\t=====================' Expenses2.csv

Even more emphasis can be achieved by the following command

$ sed -e '/Food/a============'-e'/Food/i============' Expenses.csv

The following example shows how we can segregate transactions of a particular type, lets say those related to groceries. If we we want all grocery related purchases to be written to a separate file say Groceries.csv.

$ sed -n '/Groceries/w Groceries.csv' Expenses.csv

sed can also be used to perform string substitution. For example, if we want to change all the entries of 'Other' to 'Miscellaneous', it can be done as follows

$ sed -n '/Other/s//Miscellaneous/' Expenses.csv

So far, we have seen text processing capabilities of sed. It is now time to look into the awk utility, which is more potent because it is highly programmable. It provides programmable constructs like conditionals, looping, formatting, etc. In our earlier examples, the way text was displayed was not pleasing to the eye. We will now format the content such that its display is more aesthetic. The following awk command shows its formatting capabilities.

$ awk -F"," 'NR > 1 {printf("%10s\t%.3s %16.2f %-10.10s\t %-30s\n", $1, $2, $3, $4, $6)}' Expenses.csv

Now let us look at slightly more complex examples; let's say we want to list the various types of expense categories present in the file. To do this, we should focus on the fourth column, which indicates the transaction category. Additionally, we want only the unique values from this column. We can achieve it as follows. First, we fetch the contents of the fourth column, sort it and later eliminate duplicates using the uniq command.

$ awk -F "," 'NR>1 {print $4}' Expenses.csv | sort | uniq

Suppose we want to find the highest expenditure among all our transactions. This would require more instructions therefore, let us write it as a script named FetchMax.awk.

$ awk -f FetchMax.awk Expenses.csv

Next, let us find the total expenditure for the Merchandise category. The script file CategoryExpenses.awk is shown below.

Use the command , $ awk -f CategoryExpenses.awk Expenses.csv

We can improve the script much further by specifying the category during runtime, shown in CategoryExp2.awk.

$ awk -v category=”Groceries” -f CategoryExpenses2.awk Expenses.csv

We have seen how the three utilities grep, sed and awk form a powerful triad that is essential for anyone dealing with text processing on large texts. sed is best for string processing, and awk is better at handling columnar data. These can be further combined together to achieve even more complex tasks. To sum it up, this troika of utilities is like a swiss knife in the armory of a data analyst.

The author is a research scholar at the Indian Institute of Technology, Hyderabad